Table of Contents

Process Synchronization

The task of process synchronization is achieved in operating systems with the help “Shared Memory“.

Question: What is Shared Memory?

Answer: It is defined as the region in main memory (RAM) which is being accessed by two or more than two processes and concurrent read and writes are performed on that shared section of memory.

So, as of now, we understood what is process synchronization and how it is achieved in operating systems. But there are several problems related to this shared memory concept and we are going to discuss them next.

There are two cases of processes running in a system and trying to access a shared region:

Processes -> not running in parallel

When this is the case, then there occurs no problem with shared memory concept in process synchronization, because a process can simply access the shared memory without worrying about the thing that there might be some other process currently accessing and reading/writing the data to that portion of memory.

Processes -> running in parallel

In this case, there are several problems that need to be discussed:

First: One process is reading and one is reading at the same time from shared memory

Process P1 is writing to file “my-file” which is currently opened in shared memory and in the meantime process P2 wants to read data from the same file by accessing that shared memory, then, as a result, P2 may read more or less written data from the file.

Second: Both (or more) the processes are trying to write the data to the same file in shared memory:

Process P1 is writing to “my-file” which is currently opened in shared memory and in the meantime process P2 also wants to write some data to the same file then, in this case, it becomes very complicated to know that what process’s content needs to be saved first.

Note: Only a part of a program which is currently executing in main memory is being accessed by different processes in the same time and that part is known as Critical Section.

Question: What is a critical section?

Answer: When a part of a program which is currently executing in main memory (and now becomes a process, technically) is being accessed by other processes, then that part is defined as a critical section. One more thing, it is not necessary that the critical section is always a part of a program, it can also be a shared variable residing in the shared memory.

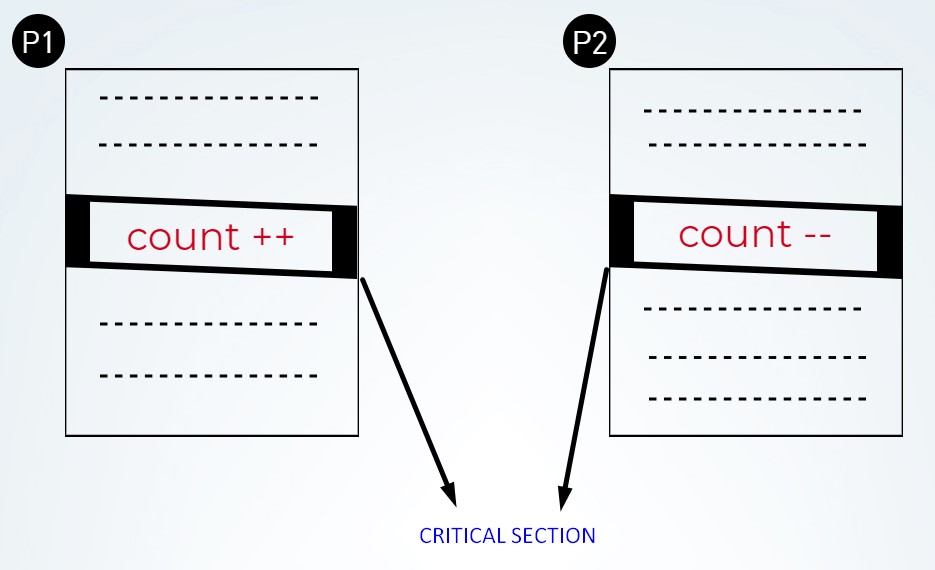

Let us now make it crystal clear with the help of an example.

Example: On Shared Variable (count)

From the picture shown it should be clear that there are two processes (P1 and P2) that are currently accessing a shared variable named as “count”.

Now, in this case, let us say that the code for both P1 and P2 is as follows:

Explaining the not so assembly code from both the processes:

MOV = moving the data of count variable to Ro (register).

INC = incrementing the data of R0 (register value to +1)

DEC = decrementing the data of R0 (register value to -1)

Next, by not taking so much time let us explain the whole example now:

If say, the value of “count = 5” and the execution of accessing it by P1 and P2 is as follows

P1: line 1, line 2 [preempt] P2: line 1, line 2, line 3 [preempt] P2: line3

At the end of this execution in parallel, the final value of count is “count = 6”.

Now, this may seem good, but in reality, this method again rises to a bigger problem of Race Condition.

Question: What is Race Condition?

Answer: When the final answer depends upon the order of execution of processes executing the statements to the critical section or shared variable.

Note: This problem of Race Condition also needs to be removed.

Question: How to achieve that and remove the race condition problem?

Answer: This can be removed with the help of Process Synchronization Techniques.

Process Synchronization Techniques

There are two types of synchronization techniques:

1. With Busy Waiting (wastage of CPU time)

If a process P1 is in Critical Section then if a second process P2 wants to enter to the critical section then, in this case, P2 has to wait for the completion of P1 and this waiting of a process is known as Busy Waiting.

2. Without Busy Waiting (no wastage of CPU time)

If a process P1 is in Critical Section then if a second process P2 wants to enter to the critical section then, in this case, P2 does not have to wait for process P1 and this is synchronization technique without busy waiting.

Requirements for Synchronization Techniques OR Mechanisms

There are a total of four(4) mechanisms and they are divided into primary and secondary:

Primary

- Mutual Exclusion: It says that at a particular time only one process can enter into the critical section.

- Progress: One process cannot stop another process for entering into the critical section.

Secondary

- Bounded Waiting: If more than one process wants to enter into the critical section then one process will give chance to another process after taking a finite number of chances itself.

- Portability OR Architectural Neutrality: The solution or the mechanism should be independent of architecture.

Now, following are techniques:

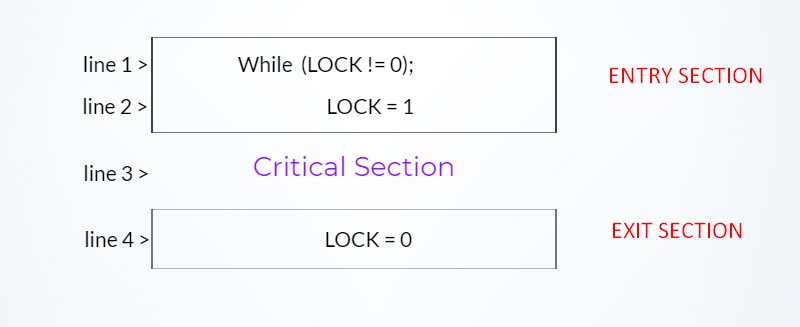

Lock Variable -> LOCK (Mechanism 1)

This mechanism is implemented is “user mode” i.e. everything is written in the program no need for the Operating System level interfere. It can be a “busy waiting solution” and can be used for more than 2 processes.

Example: Let the initial value of LOCK = 0 (zero) and let us consider that

LOCK = o -> Vacant Critical Section

LOCK = 1 -> Occupied Critical Section

Let the following program is as shown in the diagram and the execution of statements is:

P1: line 1, line 2, line 3 [preempt] P2: line 1 [preempt] P1: line 4 [preempt] P2: line 1, line 2, line 3

Problems and Dis-Advantages of “Lock Variable” mechanism

- No mutual exclusion = Because there is nothing like a lock in the real implementation, it is just a theoretical concept.

- Failure method.

- we are not going to use this method at all.

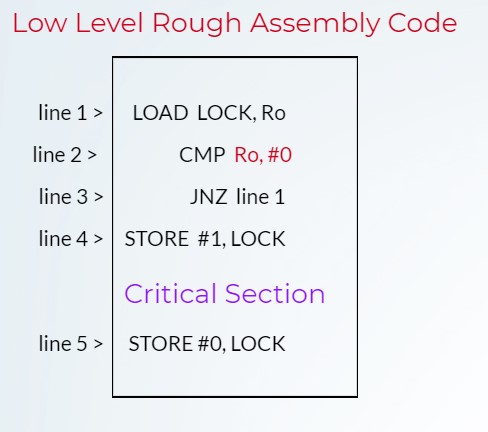

Question: In terms of code, How many processes can enter a critical section at the same time?

Answer: In order to understand this we have to convert the above given high-level language code into the following assembly code or rough low-level language code.

So, as shown in the diagram above if the preemption takes place in between line 1 and line 2 then the problem arises.

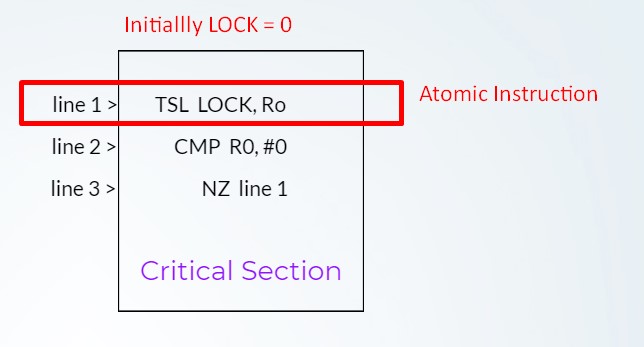

Question: How to solve this problem that “if a process is in the critical section and the program is using a LOCK variable concept then how to stop other processes from entering to the critical section while one is already in the critical section”?

Answer: This can be solved by making the lines: line 1 and line 2 atomic that means make them execute as a single atomic statement and that instruction is TSL (test set lock).

Now, whichever process runs the TSL statement first and enters the critical section first then no other process can enter to the critical section until the first one which executed the TSL first is going to come out of the critical section.

Following the order of execution of statements is a solution-proof to the problem

P1: line 1 [preempt] P2: line 1, line 2, line 3 ………

Because by implementing the TSL statement, only one process can see/set the LOCK = ‘o’.

But still there are few problems left and LOCK variable mechanism was not able to fulfil the following requirements:

- Bounded Wait: As other processes have to wait for the process which executed the TSL first.

- Architectural Independent.

Note: There is still one problem “Priority Inversion”.

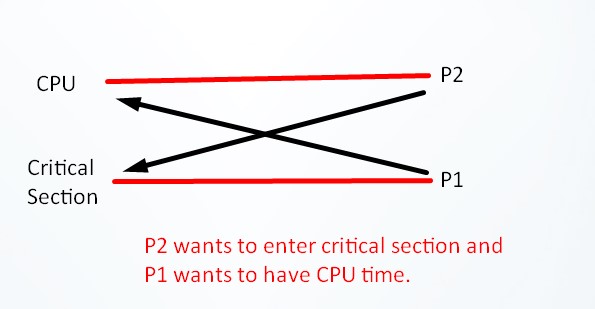

Question: What is the Priority Inversion problem?

Answer: It can be explained well with the help of the following example:

Example :

Example Explanation :

Firstly, scheduler schedules process P1 to use the CPU and then P1 enters/executes TSL instruction and after that, it is in the critical section, but in the meantime, a process P2 with higher priority than the P1 is scheduled by the scheduler in order to give it access of CPU. Now, because the CPU is still with P2 now and it cannot enter/execute the TSL either because there is still the value of LOCK = 1 set by P1 and it will not change to ‘0’ until the P1 comes out of critical section and it is because of our synchronization mechanism and in order to come out of the critical section, P1 wants the CPU but the access to CPU is currently with P2 because of its higher priority. And in contrast, P2 can not able to enter/execute the TSL in order to get into critical section because of the synchronization method and P1 is in the critical section. As a result, it is DEADLOCK between P1 and P2 and more specifically it is a SPIN DEADLOCK.

Disabling Interrupts (Mechanism 2)

Let’s consider two processes named P1 and P2. Now in order to get into the critical section, P2 will generate/raise user-level interrupts while the P1 is in the critical section and because of those interrupts the scheduler looks at that interrupt generated by high priority process P2 (it can one) and then try to give the CPU control to process P2 via kernel.

Note: This method is implemented on the hardware level.

Solution

A good solution to remove all this deadlock generating situations is to disable that interrupt generation completely until one process which is now in the critical section will come out of it.

Problem with the solution

The problem with the above solution is that we should never give the interrupt control to the user-level process. Because it may be dangerous in a way that a user-level process will keep on working in the critical section and will never going to give chance to other process and which leads to STARVATION.

Problems and Dis-Advantages of “Disable Interrupts” mechanism

- Mutual Exclusion is there in this mechanism (approved).

- Progress is also there.

- The bounded wait is a problem here.

- Architecture Independent.

Turn Variable OR Strict Alteration Approach (Mechanism 3)

This is a software level solution and is implemented at user-level or at user-mode. We do not need any help from the Operating System, because everything is implemented at user-level.

Note: This solves the problem of busy waiting but note one thing, every busy waiting solution is going to waste CPU time.

It is a two process only which means this approach only works for a total of two processes only.

Question: How is it different from LOCK VARIABLE solution?

Answer: In LOCK VARIABLE whenever LOCK = “0” then P1 and P2 both can enter to the critical section and when the value of LOCK = “1” then no one is able to enter to the critical section. However, in this approach, P1 and P2 both are going to enter into the critical section with a different value of TURN VARIABLE for example, when TURN = “0”, P1 is going to enter into the critical section and when TURN = “1”, P2 is going to enter into critical section and this is the difference between Lock Variable and Turn Variable.

Problems and Dis-Advantages of “TURN VARIABLE” mechanism

- Mutual Exclusion is there.

- Progress is still a problem.

- The bounded wait is removed.

- Architectural Independent.